The gist of pitch shifting seems easy enough to grasp. But how does time stretching work? Any of you ever look into it?

At my age it’s an imperative

I’m hearing that already.

Apparently it’s one of the more difficult programming tasks. Reaper uses Elastique, which is very good, and Paul Davis, the developer of the Ardour DAW, has said it would take too much time for the Ardour devs to attempt something as good as Elastique.

It looks like it can be done in the time domain or the frequency domain. There is a manual demonstration of basic time stretching in the time domain here:

Classic Time stretch - The Science Explained (Music Production Tutorials)

https://www.youtube.com/watch?v=pQKEDUhrncg

I have no idea how this would work in the frequency domain. Something phase vocoder, something else, blah.
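The basic time-domain idea can be sketched as plain overlap-add (OLA): chop the input into short, faded grains, read them at one spacing and lay them back down at a wider (or narrower) spacing. Below is a minimal Python sketch, assuming a mono signal stored as a list of floats. `ola_stretch`, the 1024-sample grain and the 256-sample output hop are hypothetical choices for illustration, not how Reaper or Elastique do it:

```python
import math


def hann(n: int) -> list[float]:
    """Periodic Hann window: a smooth fade-in/fade-out over n samples."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def ola_stretch(x: list[float], stretch: float,
                frame: int = 1024, hop_out: int = 256) -> list[float]:
    """Naive overlap-add (OLA) time stretch.

    stretch > 1 makes the audio longer (slower), stretch < 1 shorter.
    Grains are read from x every hop_in samples and written to the output
    every hop_out samples; each grain is copied unchanged, so pitch is kept.
    """
    hop_in = hop_out / stretch
    win = hann(frame)
    n_frames = max(1, int((len(x) - frame) / hop_in) + 1)
    out_len = (n_frames - 1) * hop_out + frame
    out = [0.0] * out_len
    norm = [0.0] * out_len
    for m in range(n_frames):
        start_in = int(round(m * hop_in))
        start_out = m * hop_out
        for i in range(frame):
            j = start_in + i
            sample = x[j] if j < len(x) else 0.0  # zero-pad past the end
            out[start_out + i] += win[i] * sample
            norm[start_out + i] += win[i]
    # Divide by the summed windows so overlapping grains keep the original level.
    return [o / w if w > 1e-8 else 0.0 for o, w in zip(out, norm)]


sr = 8000
x = [math.sin(2 * math.pi * 440 * n / sr) for n in range(sr)]  # 1 s of 440 Hz
y = ola_stretch(x, 2.0)
print(len(x), len(y))  # 8000 14848
```

A stretch of 2.0 gives roughly twice the length at the same pitch (a little less here because of the grain at the end). Because the grains are laid down without lining up their waveforms, naive OLA tends to smear or flutter on pitched material. Refinements such as WSOLA search for the best-matching grain position to reduce that.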

The one thing I never use time stretching for is to speed up guitar solos, as any audio stretching degrades the audio at least a little bit. I have on rare occasions slightly sped up or slowed down a section that has solos, though. There’s a song I did called On Through the Night where I slowed the outro section, printed it, had a computer crash, then sped it up again afterwards. Not best practice.

Still reading up here and there on how time stretching works in the frequency domain.

I know jack about signals and systems, having never studied the topic, so I don’t yet know why this is the way it is. But apparently, in the world of signals and systems, time shifting works the opposite way to what a layman might expect. Say you have some signal x[n], where n is a sample number and [ ] is the notation for a discrete signal, as opposed to x(t) for a continuous signal. If you want to shift the signal to the right by k samples, the operation is x[n - k] rather than x[n + k], and if you want to shift it to the left, the operation is x[n + k]. Again, why this is, I have no clue yet.
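One way to see why the minus sign means "later" is to read x[n - k] as a recipe for building a new signal y: to get output sample n, fetch the input sample from k steps earlier. Here is a small Python sketch. The `shift` helper is just for illustration; it keeps the original length and fills with zeros:

```python
def shift(x: list[float], k: int) -> list[float]:
    """Build y with y[n] = x[n - k], using 0.0 where n - k falls outside x.

    Each output sample looks back k samples into x, so a value that sat at
    index n0 in x shows up at index n0 + k in y: positive k is a delay,
    i.e. a shift to the right. Samples pushed past the end are dropped.
    """
    return [x[n - k] if 0 <= n - k < len(x) else 0.0 for n in range(len(x))]


x = [0.0, 1.0, 0.0, 0.0, 0.0, 0.0]  # a single impulse at n = 1
print(shift(x, 3))   # [0.0, 0.0, 0.0, 0.0, 1.0, 0.0]: impulse now at n = 4 (right)
print(shift(x, -1))  # [1.0, 0.0, 0.0, 0.0, 0.0, 0.0]: x[n + 1] puts it at n = 0 (left)
```

The sign describes where each new sample is fetched from, not which way the waveform moves: y[4] = x[4 - 3] = x[1].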

I bring this up because I have run into this curious stumbling block multiple times while reading about fundamental DSP topics such as convolution. I’m sure there is a reasonable explanation for it, but I’ve never come across one. Nor have I seen anyone point out the conflict between the notation and the result of the operation.

For an example, see http://www.dspguide.com/ch5/2.htm and search for the string "Pay particular notice to how the mathematics of this shift is written".

And if someone here knows the reason for this notation, clue me in, will ya?

My understanding was based on frequency domain thinking.

If all sounds are a sum of sine waves, then slowing down the rate of change of the level of those sine waves gives you the same mix of frequencies, but a slower rate of change of the level of those frequencies.

Or something. Never did pretend to understand it fully.
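That idea can be tried out with a single sine wave: keep its frequency fixed but read its level curve (envelope) more slowly. Below is a minimal Python sketch, assuming an 8 kHz sample rate and a made-up exponential decay as the level curve. `tone`, `decay` and `zero_crossings` are hypothetical helpers, and a real time stretcher would first have to estimate the sine components from the audio, which this skips:

```python
import math
from typing import Callable

SR = 8000  # sample rate in Hz (assumed for illustration)


def tone(freq: float, env: Callable[[float], float], seconds: float) -> list[float]:
    """A sine at a fixed frequency whose level follows env(t), t in seconds."""
    return [env(n / SR) * math.sin(2 * math.pi * freq * n / SR)
            for n in range(int(seconds * SR))]


def decay(t: float) -> float:
    """Example level curve: a plucked-note style exponential decay."""
    return math.exp(-3.0 * t)


def zero_crossings(sig: list[float]) -> int:
    """Count sign changes, a rough stand-in for pitch."""
    return sum(1 for a, b in zip(sig, sig[1:]) if a * b < 0)


stretch = 2.0
original = tone(440.0, decay, 1.0)
# Same 440 Hz sine, but the level curve is read at half speed
# (env(t / stretch)), so the note lasts twice as long and decays twice as slowly.
stretched = tone(440.0, lambda t: decay(t / stretch), 1.0 * stretch)
print(len(original), len(stretched))  # 8000 16000
```

Both signals have about 880 zero crossings per second (two per cycle of 440 Hz); only the level curve takes twice as long. Simply playing `original` back at half the sample rate would also double its length, but would drop the pitch an octave.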

Interesting. Trying to wrap my noggin around that…

Maybe my rewording here is oversimplified. If you want to slow a signal down, you take an FFT of successive windows of the signal. For each window, you create enough successive copies to achieve the desired time stretch and assemble them end to end, then move on to the next window, and so forth. Meanwhile, the appropriate portion of the new, slower rate of change (of amplitude) is applied to each window and its copies, and an IFFT moves the new signal back to the time domain.

Also, I think a windowing function (essentially a fade-in and fade-out of each window) is applied just after acquisition, to deal with partial sine cycles at the window edges causing spectral leakage.

Does that jibe with your understanding of it?
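For comparison, the textbook phase vocoder works a little differently from making copies of each window. It reads overlapping windows from the input at a small hop and writes them to the output at a larger hop. It keeps each FFT bin's magnitude but re-accumulates its phase so the overlapping windows still line up. The Hann window in it is the fade-in/fade-out described above. Below is a minimal pure-Python sketch, assuming a mono list of floats and a power-of-two frame size. `phase_vocoder`, the 256-sample frame and the 64-sample output hop are hypothetical choices, and production stretchers typically add refinements such as transient handling and phase locking on top:

```python
import cmath
import math


def fft(a: list) -> list[complex]:
    """Recursive radix-2 FFT; len(a) must be a power of two."""
    n = len(a)
    if n == 1:
        return [complex(a[0])]
    even, odd = fft(a[0::2]), fft(a[1::2])
    tw = [cmath.exp(-2j * math.pi * k / n) * odd[k] for k in range(n // 2)]
    return ([even[k] + tw[k] for k in range(n // 2)]
            + [even[k] - tw[k] for k in range(n // 2)])


def ifft(a: list[complex]) -> list[complex]:
    """Inverse FFT using the conjugate trick."""
    n = len(a)
    return [v.conjugate() / n for v in fft([v.conjugate() for v in a])]


def phase_vocoder(x: list[float], stretch: float,
                  n_fft: int = 256, hop_out: int = 64) -> list[float]:
    """Time-stretch x by `stretch` (>1 = slower) without changing pitch.

    Assumes len(x) >= n_fft and n_fft is a power of two.
    """
    hop_in = max(1, round(hop_out / stretch))  # analysis hop, in samples
    win = [0.5 - 0.5 * math.cos(2 * math.pi * i / n_fft) for i in range(n_fft)]
    n_frames = (len(x) - n_fft) // hop_in + 1
    out_len = (n_frames - 1) * hop_out + n_fft
    out, norm = [0.0] * out_len, [0.0] * out_len
    prev_phase = [0.0] * n_fft
    synth_phase = [0.0] * n_fft

    for m in range(n_frames):
        seg = x[m * hop_in: m * hop_in + n_fft]
        spec = fft([w * s for w, s in zip(win, seg)])  # windowed analysis frame
        for k in range(n_fft):
            phase = cmath.phase(spec[k])
            if m == 0:
                synth_phase[k] = phase
            else:
                omega = 2 * math.pi * k / n_fft  # bin centre, rad/sample
                # Phase change beyond what the bin centre alone predicts,
                # wrapped to [-pi, pi].
                dphi = phase - prev_phase[k] - omega * hop_in
                dphi -= 2 * math.pi * round(dphi / (2 * math.pi))
                true_freq = omega + dphi / hop_in
                # Advance the output phase by the output hop, not the input hop.
                synth_phase[k] += true_freq * hop_out
            prev_phase[k] = phase
        # Keep the magnitudes, use the re-accumulated phases, back to time.
        frame = ifft([abs(spec[k]) * cmath.exp(1j * synth_phase[k])
                      for k in range(n_fft)])
        start = m * hop_out
        for i in range(n_fft):
            out[start + i] += win[i] * frame[i].real  # synthesis window + OLA
            norm[start + i] += win[i] ** 2
    return [o / w if w > 1e-8 else 0.0 for o, w in zip(out, norm)]


sr = 8000
x = [math.sin(2 * math.pi * 440 * n / sr) for n in range(2048)]
y = phase_vocoder(x, 2.0)
print(len(x), len(y))  # 2048 3840
```

With a stretch of 2.0, a steady 440 Hz sine comes out nearly twice as long and still at 440 Hz. The key design choice is that the phase is advanced by the output hop using each bin's refined frequency, so neighbouring output windows overlap in step rather than cancelling.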

Having a play around in Reaper a bit with time stretching. If you stretch a piece of audio enough (say 1/4 speed), you can really hear the slices (or windows).

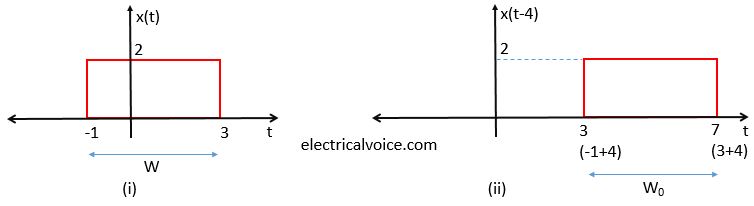

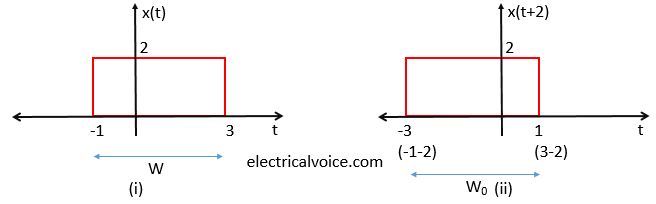

That odd thing with time shifting a signal is infuriating. It doesn’t make any goddamn sense. Subtraction means addition and addition means subtraction. This is clearly shown in the graphs below: if x(t) runs from t = -1 to t = 3, then x(t-4) runs from -1+4 = 3 to 3+4 = 7.


Source article: https://electricalvoice.com/time-shifting-time-scaling-time-reversal-of-signals/

It reminds me very much of the stupid thing to do with current in electronics, where the direction of current is taught the wrong way around in engineering, and the lame excuse given is that it has always been taught that way, so just get used to it.

I mean, seriously. Is this some sort of conspiracy to make a simple thing seem difficult to understand? Or what else could it be? For some reason this seems to just be accepted without explanation. If the gear markings in cars used Forward for going in reverse and Reverse for going forward, there would surely be enough people calling bullshit for it to get changed.

If you imagine that the axes of those graphs could be slid around independently of the red boxes, then the +/- makes sense. If you slide the axis left, you are subtracting a positional value from the original axis position, which adds the same amount onto anything plotted on the x axis.
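Worked through with the numbers from the earlier post (assuming, as the (-1+4) and (3+4) suggest, that x(t) is nonzero only from t = -1 to t = 3), the minus sign sits inside the argument, so finding where the shifted signal lives means solving for t:

```latex
\begin{aligned}
y(t) &= x(t-4) \\
y(t) \neq 0 &\iff -1 \le t-4 \le 3 \\
            &\iff -1+4 \le t \le 3+4 \\
            &\iff 3 \le t \le 7
\end{aligned}
```

Subtracting 4 inside the argument means every value x had at time t₀ now appears at t = t₀ + 4, which is the slide to the right in the graphs.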

Yeah, that definitely could make sense. I’d never pictured the timeline being pulled around like that.

It’s still slightly weird. There’s actual stuff and then there’s finding a way of formally describing it. Like somebody channeling lost souls via their guitar and then somebody else coming along and notating it with all the necessary hieroglyphics.

I’m sure there are better ways of describing a lot of things waiting to be discovered/created where we have existing clunky languages that do the job at the mo.